A visualization for the EM algorithm

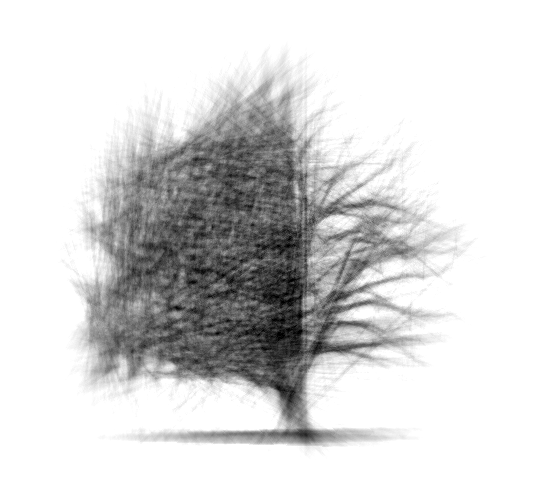

The following graph shows the steps of the EM algorithm for a Naive Bayes setting in a population model. In the example, there are n1+n2 features, each of which is an independent Bernoulli variable given the class. There are two document classes, C1 and C2, occurring with equal probability. C1 takes value 1 in each of the first n1 features with probability p1 and in each of the remaining n2 features with probability p2. C2 has complementary probabilities in each feature: if C1 has probability p on a feature, C2 has probability 1-p.

The graph shows the two stable fixed points corresponding to the probabilities of the classes. It also draws a separator between their regions of convergence. Clicking anywhere on the graph runs the EM algorithm for 100 steps, with the guesses for (p1,p2) beginning from the clicked point (x,y).
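
For readers who want to reproduce the trajectories offline, here is a minimal Python sketch of one way the population EM iteration could be computed. It is not the code behind the graph. The function names `em_step` and `run_em`, and the example values n1 = n2 = 3, p1 = 0.8, p2 = 0.7, are assumptions chosen for illustration. The sketch also assumes that the class prior stays fixed at 1/2, that the complementary constraint between C1 and C2 is enforced in the M-step, and that all guesses lie strictly between 0 and 1. Because the model is a population model, the expectation is taken exactly over the counts of ones in each feature group instead of over sampled documents.

```python
from math import comb


def em_step(q1, q2, p1, p2, n1, n2):
    """One population EM step from guess (q1, q2); (p1, p2) are the true values.

    Assumes n1, n2 >= 1 and 0 < q1, q2 < 1.
    """
    new_q1 = 0.0
    new_q2 = 0.0
    # A document matters only through k1, k2: the number of ones
    # in the first n1 features and in the remaining n2 features.
    for k1 in range(n1 + 1):
        for k2 in range(n2 + 1):
            # True probability of (k1, k2) under the equal-weight mixture.
            ways = comb(n1, k1) * comb(n2, k2)
            true_c1 = p1**k1 * (1 - p1)**(n1 - k1) * p2**k2 * (1 - p2)**(n2 - k2)
            true_c2 = (1 - p1)**k1 * p1**(n1 - k1) * (1 - p2)**k2 * p2**(n2 - k2)
            prob = 0.5 * ways * (true_c1 + true_c2)

            # E-step: posterior of class C1 under the current guess.
            like_c1 = q1**k1 * (1 - q1)**(n1 - k1) * q2**k2 * (1 - q2)**(n2 - k2)
            like_c2 = (1 - q1)**k1 * q1**(n1 - k1) * (1 - q2)**k2 * q2**(n2 - k2)
            w = like_c1 / (like_c1 + like_c2)

            # M-step: a one under C1 and a zero under C2 both count
            # toward the parameter, since C2 uses probability 1 - q.
            new_q1 += prob * (w * k1 + (1 - w) * (n1 - k1)) / n1
            new_q2 += prob * (w * k2 + (1 - w) * (n2 - k2)) / n2
    return new_q1, new_q2


def run_em(x, y, p1, p2, n1, n2, steps=100):
    """Run EM from the starting guess (x, y) and return every iterate."""
    path = [(x, y)]
    for _ in range(steps):
        x, y = em_step(x, y, p1, p2, n1, n2)
        path.append((x, y))
    return path


if __name__ == "__main__":
    # Illustrative values only; they are not taken from the graph.
    path = run_em(0.6, 0.6, p1=0.8, p2=0.7, n1=3, n2=3)
    print(path[-1])
```

Under these assumptions, (p1, p2) and (1-p1, 1-p2) are both fixed points of `em_step`, matching the two class probabilities described above, and (0.5, 0.5) is a fixed point as well. Plotting the points in `path` gives a trajectory comparable to what a click at (x, y) produces.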